In a quiet move, LinkedIn automatically enrolled its users in a program that allows their content to be used for training AI models. If you didn’t receive a notification about this, you’re not alone. The change has sparked conversations about transparency, data privacy, and the ethical handling of user content.

With AI becoming an integral part of how platforms function, it’s no surprise that companies are collecting more data than ever before. But the question remains: When did platforms stop asking for permission?

LinkedIn’s Silent Opt-In

LinkedIn made a change to its settings, automatically opting users into a program that uses their content to train AI models. This means that everything you post on LinkedIn—whether it’s personal data, an article, a comment, or a status update—could be used to improve LinkedIn’s AI systems.

What’s troubling about this move is not the advancement of AI itself but how it was implemented. As far as we know, users weren’t informed or asked to consent; instead, LinkedIn silently opted them in. This type of behavior from large platforms can feel like a breach of trust. Shouldn’t users be given the chance to opt in voluntarily? A setting like this should not be on by default.

Why This Matters: Data Privacy and User Control

Data privacy is a major concern in today’s digital world. Users have the right to know how their information is being used and to control whether they participate in certain programs.

While AI holds incredible potential—particularly in improving services and automating processes—platforms like LinkedIn must prioritize user consent. When companies collect data without explicit permission, they blur the line between innovation and exploitation.

LinkedIn’s recent move is part of a growing trend where platforms quietly change privacy policies or terms of service, assuming most users won’t notice or won’t take the time to adjust their settings. Sometimes, companies will send a generic email about a privacy policy update, sharing a link to the new terms, fully aware that most people won’t read through them. A more transparent approach would be to clearly highlight the changes, making them easy to understand without requiring users to sift through a lengthy 10+ page privacy policy.

This pattern is worth paying close attention to because it sets a precedent for how data could be handled in the future, not just by LinkedIn but across the tech industry.

Opting In by Default vs. Giving Informed Consent

Automatically opting users into programs without their explicit consent can undermine trust. It shifts the responsibility from the platform to the user, placing the burden on you to find and change your settings. This is in stark contrast to ethical data practices, which prioritize informed consent.

Informed consent means that you are fully aware of what you’re agreeing to and are given a clear choice to opt in. In contrast, an opt-out culture assumes agreement unless you actively say no, and that is the model LinkedIn chose here.

What’s more, the setting that enables your content to be used for AI training is buried deep in LinkedIn’s privacy settings, making it less likely that users will stumble across it on their own.

LinkedIn’s AI Training Program: What Does It Mean for You?

In practice, LinkedIn uses your content, such as posts, articles, and other interactions, to improve its AI models. These models might power features like better content recommendations, more accurate search results, or other automated processes on the platform.

While that might sound beneficial, there are important considerations:

- Your data is being used without your explicit consent.

- You don’t know exactly how your content will be used or in what capacity.

- Once your content has been used to train an AI model, it generally can’t be “unlearned” by the system.

This raises important questions about ownership, control, and the long-term implications of allowing your content to be used in this way. While some users may be comfortable with their content contributing to AI improvements, others might feel differently, especially without being asked first.

How to Opt Out: Protecting Your Content and Privacy

If you’re concerned about how your content is being used, you can opt out of LinkedIn’s AI training program. Here’s how to do it:

- Go to LinkedIn settings.

- Navigate to the “Data privacy” section.

- Look for the option titled “Data for AI improvement.”

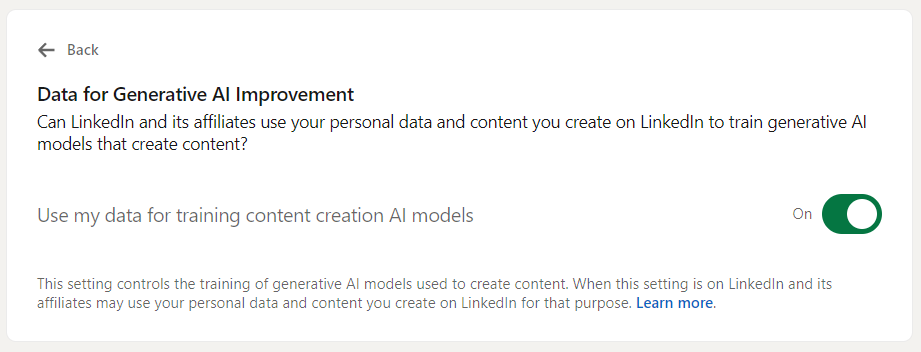

- Toggle off the setting “Use my data for training content creation AI models.”

This setting controls whether LinkedIn can use your personal data and content to train AI models that create new content. By opting out, you maintain more control over how your content is used.

Here’s the link you can copy into your browser:

linkedin.com/mypreferences/d/settings/data-for-ai-improvement

Taking a few moments to review your privacy settings can give you peace of mind, knowing your data isn’t being used in ways you don’t approve of. It’s a good practice to regularly check your settings on other platforms too—many tech companies have similar policies.

The Bigger Picture: How Should Platforms Handle User Consent for AI?

The situation with LinkedIn reflects a larger issue with how tech companies handle user data. As AI continues to develop, the demand for high-quality data to train these models is only going to increase. But this should never come at the cost of user consent and trust.

Companies should implement clear, transparent communication and give users the opportunity to actively opt into data usage programs. This respects the user’s autonomy and fosters a relationship built on trust.

It’s not enough to bury important settings deep within privacy menus or assume that users won’t mind. The ethical approach is to explain exactly how user data will be used and provide the tools necessary for users to make informed choices.
